If your CISO is still trying to block ChatGPT at the firewall, they have already lost. They lost about eighteen months ago, to be precise. The gap between your boardroom perception and operational reality is now unbridgeable by policy alone. While 96% of executives admit their governance structures lag behind implementation, 75% of knowledge workers are already using AI at work. But the most damning statistic isn’t the volume; it is the account type. 73.8% of workplace GenAI accounts are personal, not corporate.

If your company is part of that group, your workforce is currently running a massive, unpaid R&D lab on company time. They are routing your intellectual property into consumer-grade models with zero data sovereignty. Your “ban” has not stopped the data flow; it has simply forced it onto 5G networks and personal devices where you cannot see it.

The question isn’t whether you have Shadow AI. The question is whether you are harvesting its value or maximising your liability by pretending that it doesn’t exist or that policy alone can manage it.

Accumulating “Toxic Assets”

The corporate firewall, once the symbol of IT control, is now merely an inconvenience. Usage frequency of GenAI tools has grown 61x in the last 24 months, and nearly 15% of all employee prompts now contain sensitive or proprietary data.

When employees use the free tier of ChatGPT, Claude, or Gemini to do their jobs, they are not just breaking a policy; they are creating legal risks and liabilities for your organisation.

This is a concrete legal failure in three dimensions:

1. The GDPR “Right to Erasure” Trap (Article 17)

In traditional IT, if you store PII (Personally Identifiable Information) without proper consent, you delete the database row. Problem solved.

With GenAI, when an employee pastes a customer name into a consumer model that trains on user input, that data can end up probabilistically encoded in the model’s weights. It cannot be “deleted” without retraining the entire model, a process costing millions. If a customer exercises their Right to Erasure, you are technically incapable of complying, and you stay in breach for as long as that model is in service.

2. The “Shadow HR” Liability (EU AI Act)

Consider an HR manager who, frustrated with internal delays, uses a free LLM to summarise and rank CVs. Under Annex III of the EU AI Act, recruitment algorithms are classified as High-Risk AI Systems. (The rules for High-Risk systems are currently postponed and will probably come into force sometime in 2027.)

By using a shadow tool, your firm has inadvertently deployed a High-Risk system without a Conformity Assessment, without a Quality Management System, and without registration. The liability for this falls on you, the deployer. The potential fine will be up to €15m or 3% of global turnover once the law comes into force.
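To make that exposure tangible, the ceiling can be worked out for a given turnover. This is a minimal sketch, not legal advice: the function name `max_fine_eur` and the `is_sme` flag are hypothetical, and it assumes the higher of the two amounts applies to large undertakings and the lower one to SMEs, which should be checked against the final legal text.

```python
from decimal import Decimal

FIXED_CAP_EUR = Decimal("15000000")  # the EUR 15m figure quoted above
TURNOVER_SHARE = Decimal("0.03")     # 3% of global annual turnover


def max_fine_eur(global_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the upper bound of the fine tier discussed above.

    Assumption: large undertakings face the higher of the two amounts,
    SMEs the lower one. Verify against the applicable legal text.
    """
    turnover_based = global_turnover_eur * TURNOVER_SHARE
    pick = min if is_sme else max
    return pick(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    # A firm with EUR 2bn turnover: the 3% branch dominates the fixed cap.
    print(max_fine_eur(Decimal("2000000000")))
```

For a mid-sized firm the fixed €15m figure is the binding number; for a large group the turnover-based figure quickly overtakes it.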

3. Data Sovereignty Failure

Free tiers almost universally route inference to US servers by default. Your data is leaving the EEA without a valid transfer mechanism, violating Schrems II. For example, OpenAI notes that data residency controls are an enterprise feature, not a consumer one, and that tier requires at least 150 seats.

Why You Should Not Ban It Outright

You cannot ban this technology because the utility is simply too high. Your employees are rational actors; they use these tools because they work. The Harvard Business School / BCG “Jagged Frontier” study quantified exactly what you are losing by enforcing a ban:

Consultants using AI completed 12.2% more tasks.

They worked 25.1% faster.

Their output quality was 40% higher.

Crucially, the benefit was highest for lower-performing consultants, who saw a 43% performance increase.

This acts as a massive equaliser. Shadow AI users are effectively training themselves to be “centaurs” (humans integrated with AI) without corporate guidance. Banning this may look like risk management, but it is also a voluntary decision to reduce your workforce’s competitiveness by a quarter.

Protocol “Amnesty & Pave”

The solution is not to double down on prohibition. It is to construct a “Paved Road”—a path of least resistance that is safer and easier than the shadow alternative.

Phase 1: The Amnesty (Cultural Reset)

You need to clear the “compliance debt” immediately.

Have your CEO (not the CISO) declare a 30-day “Safe Harbour” window. The message must be explicit: “We know you use these tools to be productive. We want to enable that innovation safely. Share your tools and use cases now, and we’ll provide safe, compliant enterprise tools, and there will be no disciplinary action for past usage.”

This converts a hidden liability into a visible asset map. You will discover “Shadow Agents”—workflows automating SQL queries, legal summaries, or code refactoring—that IT didn’t even know were necessary. This is your unpaid R&D.

Of course, this is not a complete solution for AI-powered process automation—this is not building a motorway, just paving well-trodden paths. But you have to start somewhere.

Phase 2: The Pave (AI Gateway Architecture)

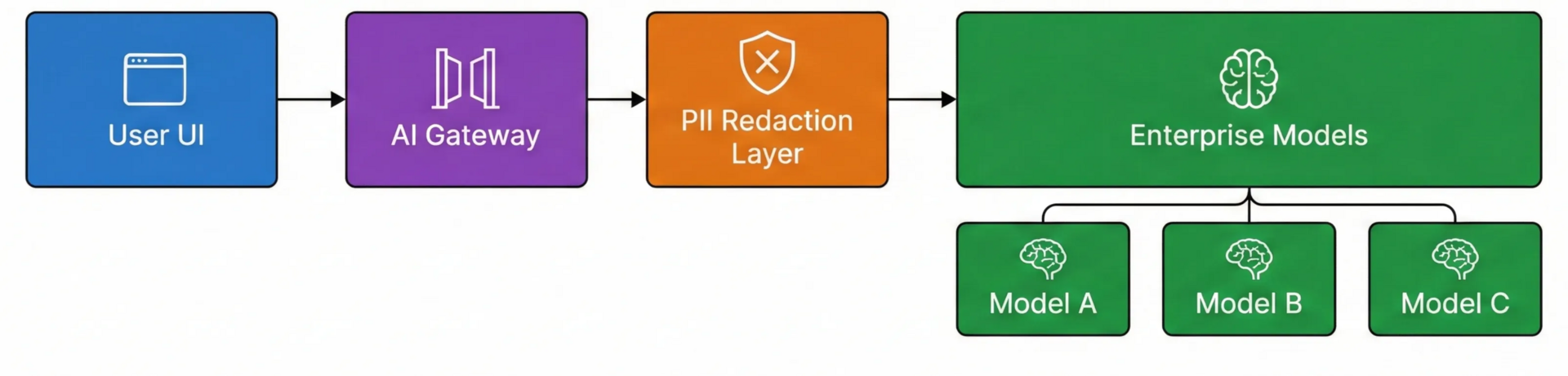

Do not give employees direct API keys. Do not simply whitelist openai.com. You must insert a control layer. The recommended architectural pattern is the Enterprise AI Gateway (utilising tools like LiteLLM, Cloudflare, or Kong).

The Architecture:

This architecture solves the legal problems without killing the productivity:

Unified API Surface: Employees access a single internal endpoint. The Gateway handles the routing to Azure OpenAI, Bedrock, or Vertex. This prevents vendor lock-in and allows for cost control, centralised prompt management, and routing of each request to the best-suited model.

The Data Firewall (Redaction): This is the critical component. Use tools within the gateway to detect and tokenise PII (credit cards, names, emails) before the prompt leaves your perimeter. The model never sees the sensitive data, which neutralises the GDPR risk. Some tools can also mask PII in audio content.

Immutable Audit Logs: Every prompt and completion is logged asynchronously to your SIEM (e.g., Splunk). This provides the mandatory “record keeping” required by Article 12 of the EU AI Act.
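The redaction and logging steps above can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular gateway product implements it: the names `redact`, `restore`, and `audit_record` are hypothetical, the two regular expressions only catch emails and card-like digit runs (detecting personal names needs a dedicated PII detector), and the hash chain makes tampering detectable rather than making the log truly immutable. Shipping the record to a SIEM is left out.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; production gateways use dedicated PII detectors.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(prompt: str) -> tuple[str, dict[str, str]]:
    """Swap each PII match for a placeholder; return the text and a vault."""
    vault: dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def swap(match: re.Match, label: str = label) -> str:
            token = f"<{label}_{len(vault) + 1}>"
            vault[token] = match.group(0)  # original value stays in-house
            return token
        prompt = pattern.sub(swap, prompt)
    return prompt, vault


def restore(text: str, vault: dict[str, str]) -> str:
    """Put the original values back into the model's completion."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


def audit_record(user: str, redacted_prompt: str, prev_hash: str) -> dict:
    """Build a log entry whose hash covers the previous entry's hash."""
    body = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": redacted_prompt,
        "prev": prev_hash,
    }
    digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
    return {**body, "hash": digest}


if __name__ == "__main__":
    safe, vault = redact("Refund anna@example.com on card 4111 1111 1111 1111 today")
    print(safe)  # only this redacted text would be sent upstream
    print(audit_record("u-123", safe, prev_hash="0" * 64)["hash"])
```

Keeping the vault inside the perimeter is the design choice that matters here: the upstream model only ever sees placeholders, and the gateway re-inserts the real values into the response on the way back.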


Phase 3: The Swap

Once the Gateway is live, you execute the swap.

Sanction: Low-risk, high-value use cases get immediate access to the Gateway.

Switch: Users on risky consumer tools are migrated to the Enterprise instance.

Stop: Only then do you block the residual high-risk, low-value tools.
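The three steps can be expressed as a small triage rule applied to the use cases collected during the amnesty. This is a hedged sketch: the `UseCase` fields, the two-level "low"/"high" scale, and the extra `REVIEW` bucket for cases the three rules do not cover are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SANCTION = 1  # grant Gateway access immediately
    SWITCH = 2    # migrate users to the Enterprise instance
    STOP = 3      # block, but only after the first two steps are done
    REVIEW = 4    # hypothetical bucket for cases the rules do not cover


@dataclass(frozen=True)
class UseCase:
    name: str
    risk: str   # "low" or "high" (assumed scale)
    value: str  # "low" or "high" (assumed scale)
    on_consumer_tool: bool


def triage(uc: UseCase) -> Action:
    """Map one discovered use case to Sanction, Switch, or Stop."""
    if uc.risk == "low" and uc.value == "high":
        return Action.SANCTION
    if uc.risk == "high" and uc.value == "low":
        return Action.STOP
    if uc.on_consumer_tool:
        return Action.SWITCH
    return Action.REVIEW


def rollout_order(cases: list[UseCase]) -> list[tuple[str, Action]]:
    """Order the work so that blocking always comes after enabling."""
    decided = [(uc.name, triage(uc)) for uc in cases]
    return sorted(decided, key=lambda item: item[1].value)


if __name__ == "__main__":
    cases = [
        UseCase("CV ranking in a free chatbot", "high", "low", True),
        UseCase("Meeting summaries", "low", "high", True),
        UseCase("Contract review", "high", "high", True),
    ]
    for name, action in rollout_order(cases):
        print(f"{action.name:<8} {name}")
```

Sorting by phase keeps the sequencing explicit: nothing is blocked until the sanctioned alternative exists.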

Security vendors sell you blockers: network tools that create the illusion of control while employees route around them. The objective is to build pathways instead. The Amnesty & Pave protocol captures the productivity gains while bringing the data sovereignty risk under technical control.

Additionally, deploying AI model access as a company-wide solution enables those who previously refrained from using these tools—because they followed security policies—to finally use them, while also spreading AI knowledge throughout the organisation.

The Briefing

The Bill for the “Oversight Gap” Has Arrived

IBM’s Cost of a Data Breach Report 2025 puts a price tag on our collective negligence: USD 670,000. That is the average additional cost of a data breach for organisations with high levels of Shadow AI compared to those without.

The report identifies a critical “AI Oversight Gap.” While adoption accelerates, governance has stalled. A staggering 63% of breached organisations lacked any AI governance policy, and 97% of AI-related breaches involved systems with improper access controls. We are deploying faster than we are securing.

Crucially, the report highlights a bifurcated reality. AI is both the poison and the antidote. While Shadow AI acts as a cost multiplier, organisations that extensively used AI for security (detection and response) saved USD 1.9 million per breach and identified threats 80 days faster.

The message is clear: AI is not an optional layer you can ignore. Used defensively, it is your strongest shield; left ungoverned in the shadows, it is an open chequebook for attackers.

It happens in the real world too

A recent incident discussed in cybersecurity circles confirms that this is not a theoretical risk mentioned in consultancy reports just to generate leads. A CISO reported a “blood-boiling” discovery: a junior developer, struggling to debug a SQL query, copy-pasted 200+ customer records (including emails and phone numbers) directly into ChatGPT.

The developer wasn’t malicious; he was just trying to do his job. The frightening part? The CISO only caught it by physically walking past the screen. The DLP system (designed for email attachments) was completely blind to the browser-based paste.

This is the “invisible factory” in action. The employee solved his problem in seconds, but in doing so, he handed the company’s database blueprint and PII to a public model. No policy prevented it. No firewall stopped it. Only a “Paved Road” architecture with browser-level redaction could have saved them.

Conclusion: the Monday Morning Question

In your next security or compliance review, ask your CISO or Data Protection Officer this single question:

“Given that we cannot legally remove data from OpenAI’s model weights, do we have a technical method to prove that no employee pasted a customer list into ChatGPT Free six months ago?”

If they cannot answer this definitively, your prohibition policy is not a control—it is a comfort blanket. Your organisation is currently accumulating regulatory exposure that you cannot see and may not be able to remediate.

If you are ready to stop fighting the tide and start governing it, let’s talk about how we can safely audit your workforce’s AI usage without triggering a panic.

Until next time, build with foresight.

Krzysztof